[Quick Tutorial] How to Run TensorFlow on a GPU Inside Docker

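The NVIDIA driver and Docker can each be installed in several ways, which makes the setup a bit fiddly, and there are also libraries such as CUDA and cuDNN to keep track of. This post is a short record of how to run TensorFlow on a GPU inside a Docker environment. If you are still looking for how to set this up, this post should get you there.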

Ubuntu version: 20.04

GPU: NVIDIA RTX 3080

TensorFlow version: v2.8

Tested: March 2022


Prerequisites

- Ubuntu 18.04 or later

- The latest version of Docker installed

- If you have not installed Docker yet, see my post on quickly installing Docker on Ubuntu


Install the NVIDIA Driver

You do not need to install CUDA or cuDNN at this point.

- Remove the old driver

    sudo apt-get purge 'nvidia*'
    sudo apt-get autoremove
    sudo apt-get autoclean
    sudo rm -rf /usr/local/cuda*

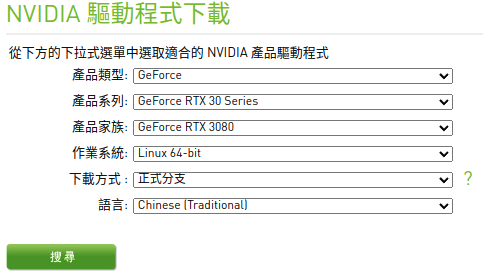

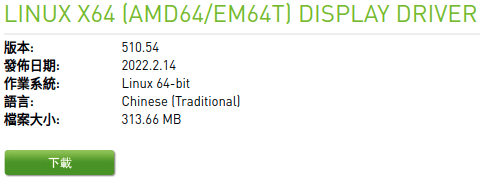

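- Look up the driver version that matches your GPU on the NVIDIA website

On my machine the version is 510. You can ignore the digits after the decimal point, and there is no need to download anything from the site, because installing with apt install is faster.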

- Install the NVIDIA driver

    $ sudo apt update
    $ sudo apt install nvidia-utils-<version number found above>
    $ sudo apt install nvidia-driver-<version number found above>

    # Example for an RTX 4090
    $ sudo apt install nvidia-utils-535
    $ sudo apt install nvidia-driver-535

- Reboot

    $ sudo reboot

- Confirm that the installation succeeded

    $ nvidia-smi
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 510.47.03    Driver Version: 510.47.03    CUDA Version: 11.6     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  NVIDIA GeForce ...  Off  | 00000000:01:00.0  On |                  N/A |
    | 66%   52C    P8    39W / 370W |    180MiB / 10240MiB |      3%      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+
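If you would rather check the driver from a script than read the table by eye, the following is a minimal sketch. It assumes `nvidia-smi` is on the PATH and uses its `--query-gpu` CSV output. The function names `parse_gpu_query` and `query_gpus` are made up for this example. It also derives the major branch number (510 in my case), which is the part used in the apt package name.

```python
import shutil
import subprocess


def parse_gpu_query(csv_text):
    """Parse the output of
    `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader`."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        # The driver version is the last comma-separated field.
        name, driver = [field.strip() for field in line.rsplit(",", 1)]
        gpus.append({
            "name": name,
            "driver_version": driver,
            # apt packages use only the major branch, e.g. 510 for 510.47.03.
            "driver_branch": driver.split(".")[0],
        })
    return gpus


def query_gpus():
    """Return parsed GPU info, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    return parse_gpu_query(result.stdout) if result.returncode == 0 else []


if __name__ == "__main__":
    for gpu in query_gpus():
        print(f"{gpu['name']}: driver {gpu['driver_version']} "
              f"(apt package nvidia-driver-{gpu['driver_branch']})")
```

An empty result means either that `nvidia-smi` is not installed or that it could not talk to the driver, which usually points back to the install or reboot step.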

Install the NVIDIA Container Toolkit

To use GPU acceleration inside a Docker container, you must install the NVIDIA Container Toolkit.

- Set up the repository

    curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
      && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
        sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
        sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

- Install nvidia-container-toolkit

    $ sudo apt-get update
    $ sudo apt-get install -y nvidia-container-toolkit

- Configure Docker to recognize the NVIDIA Container Runtime

    $ sudo nvidia-ctk runtime configure --runtime=docker

- Restart Docker

    $ sudo systemctl restart docker

- Test that the installation succeeded

    $ sudo docker run --rm --runtime=nvidia --gpus all nvidia/cuda:11.6.2-base-ubuntu20.04 nvidia-smi

If GPU information like the following appears, the installation succeeded.

    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 450.51.06    Driver Version: 450.51.06    CUDA Version: 11.0     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  Tesla T4            On   | 00000000:00:1E.0 Off |                    0 |
    | N/A   34C    P8     9W /  70W |      0MiB / 15109MiB |      0%      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+
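If the test container fails to start with `--runtime=nvidia`, one thing worth checking is whether the `nvidia-ctk runtime configure` step actually registered the runtime with Docker. The sketch below assumes the Docker daemon config lives at the default `/etc/docker/daemon.json` and that the runtime is registered under the key `nvidia`. The helper names are made up for this example.

```python
import json
from pathlib import Path

# Assumption: Docker's default daemon config location on Ubuntu.
DAEMON_JSON = Path("/etc/docker/daemon.json")


def has_nvidia_runtime(config):
    """Return True if a daemon config dict registers a runtime named 'nvidia'."""
    runtimes = config.get("runtimes", {})
    return isinstance(runtimes, dict) and "nvidia" in runtimes


def check_daemon_config(path=DAEMON_JSON):
    """Load the daemon config and report whether the nvidia runtime is present."""
    try:
        config = json.loads(path.read_text())
    except (OSError, ValueError):
        # Missing, unreadable, or malformed file: treat as not configured.
        return False
    return isinstance(config, dict) and has_nvidia_runtime(config)


if __name__ == "__main__":
    if check_daemon_config():
        print("nvidia runtime is registered with Docker")
    else:
        print("nvidia runtime not found; rerun "
              "`sudo nvidia-ctk runtime configure --runtime=docker` and restart Docker")
```

This only inspects the config file. It does not confirm that the Docker daemon has been restarted since the file changed.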

Run TensorFlow on the GPU Inside Docker

- Pull an image

    $ sudo docker pull tensorflow/tensorflow:latest-gpu

- Start it with the GPU enabled, which drops you into a shell inside the container

    $ sudo docker run --gpus all -it tensorflow/tensorflow:latest-gpu bash

- Import tensorflow directly to check

    # Enter the Python console
    $ python
    >>> import tensorflow as tf
    >>> tf.config.list_physical_devices('GPU')

The output below confirms that the GPU was detected.

    >>> tf.config.list_physical_devices('GPU')
    2022-03-08 07:08:03.310660: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:936] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
    2022-03-08 07:08:03.310865: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:936] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
    2022-03-08 07:08:03.310996: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:936] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
    [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]
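Listing the device only shows that TensorFlow can see the GPU. To go one step further, the sketch below runs a small matrix multiplication and reports which device executed it. It is meant to be run inside the container. The function name `gpu_smoke_test` and the matrix size are arbitrary choices for this example, and the function returns None if TensorFlow cannot be imported.

```python
def gpu_smoke_test(size=1024):
    """Multiply two random matrices and return the device that ran the op.

    Returns None when TensorFlow is not importable (e.g. outside the container).
    """
    try:
        import tensorflow as tf
    except ImportError:
        return None

    gpus = tf.config.list_physical_devices("GPU")
    print(f"GPUs visible to TensorFlow: {len(gpus)}")

    a = tf.random.normal((size, size))
    b = tf.random.normal((size, size))
    product = tf.matmul(a, b)
    # In eager mode each tensor records the device it was placed on.
    return product.device


if __name__ == "__main__":
    device = gpu_smoke_test()
    print(f"matmul ran on: {device}")
```

If the GPU is working, the reported device string should end in something like `GPU:0`. A string ending in `CPU:0` would suggest that TensorFlow fell back to the CPU.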

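The steps above confirm that TensorFlow can use the GPU inside a Docker environment. If you have any questions or run into problems, leave a comment below and I will reply as soon as I can.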

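Recommended Docker Course

If you will be using Docker in your work environment over the long term, or you are interested in container virtualization, I recommend Maximilian's course on Udemy. It offers more than an hour of free preview, and when it is on sale it costs only a little over NT$300 for more than 20 hours of material covering current containerization techniques.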

